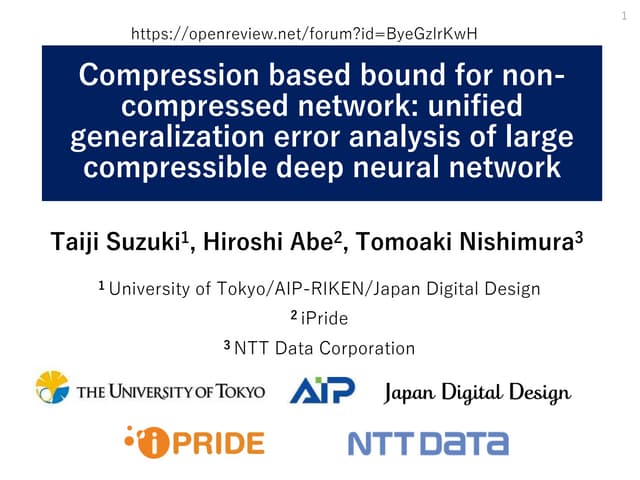

ICLR 2020: Compression based bound for non-compressed network


ICLR 2020: Compression based bound for non-compressed network: unified generalization error analysis of large compressible deep neural network

1) The document presents a new compression-based bound for analyzing the generalization error of large deep neural networks, even when the networks are not explicitly compressed.

2) It shows that if a trained network's weight matrices and covariance matrices are close to low rank, then the network has a small intrinsic dimensionality and can be efficiently compressed.

3) This allows deriving a tighter generalization bound than existing approaches, providing insight into why overparameterized networks generalize well despite having more parameters than training examples.
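
The link between low rank and compressibility in point 2 can be illustrated with a small NumPy sketch. This is not the paper's method or its bound: it only shows, on a synthetic matrix, that a near-low-rank weight matrix can be replaced by two thin factors with far fewer parameters and little error. The function names `truncated_svd_compress` and `effective_rank`, the tolerance `tol`, and the matrix sizes are all assumptions made for illustration.

```python
import numpy as np


def truncated_svd_compress(W, rank):
    """Return factors (A, B) such that A @ B is the best rank-`rank`
    approximation of W in Frobenius norm (hypothetical helper)."""
    U, s, Vt = np.linalg.svd(W, full_matrices=False)
    A = U[:, :rank] * s[:rank]  # shape (m, rank), columns scaled by singular values
    B = Vt[:rank, :]            # shape (rank, n)
    return A, B


def effective_rank(W, tol=0.01):
    """Count singular values larger than tol times the largest one.
    This threshold rule is an illustrative choice, not the paper's definition."""
    s = np.linalg.svd(W, compute_uv=False)
    return int(np.sum(s > tol * s[0]))


# Synthetic "trained" weight matrix: exactly rank 8 plus small noise (assumed setup).
rng = np.random.default_rng(0)
m, n, r = 256, 256, 8
W = rng.standard_normal((m, r)) @ rng.standard_normal((r, n))
W += 1e-4 * rng.standard_normal((m, n))

A, B = truncated_svd_compress(W, r)
rel_err = np.linalg.norm(W - A @ B) / np.linalg.norm(W)

params_full = W.size                 # m * n entries in the original layer
params_compressed = A.size + B.size  # r * (m + n) entries in the two factors

print("effective rank:", effective_rank(W))
print("relative error:", rel_err)
print("parameters:", params_full, "->", params_compressed)
```

In this toy setting the parameter count drops from m·n to r·(m+n), which is the kind of reduced degrees of freedom that a compression-based argument works with. A real network would need this checked layer by layer on trained weights.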

Neural material (de)compression – data-driven nonlinear dimensionality reduction

YK (@yhkwkm) / X

Co-clustering of multi-view datasets: a parallelizable approach

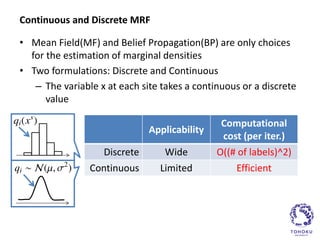

Discrete MRF Inference of Marginal Densities for Non-uniformly Discretized Variable Space

Higher Order Fused Regularization for Supervised Learning with Grouped Parameters

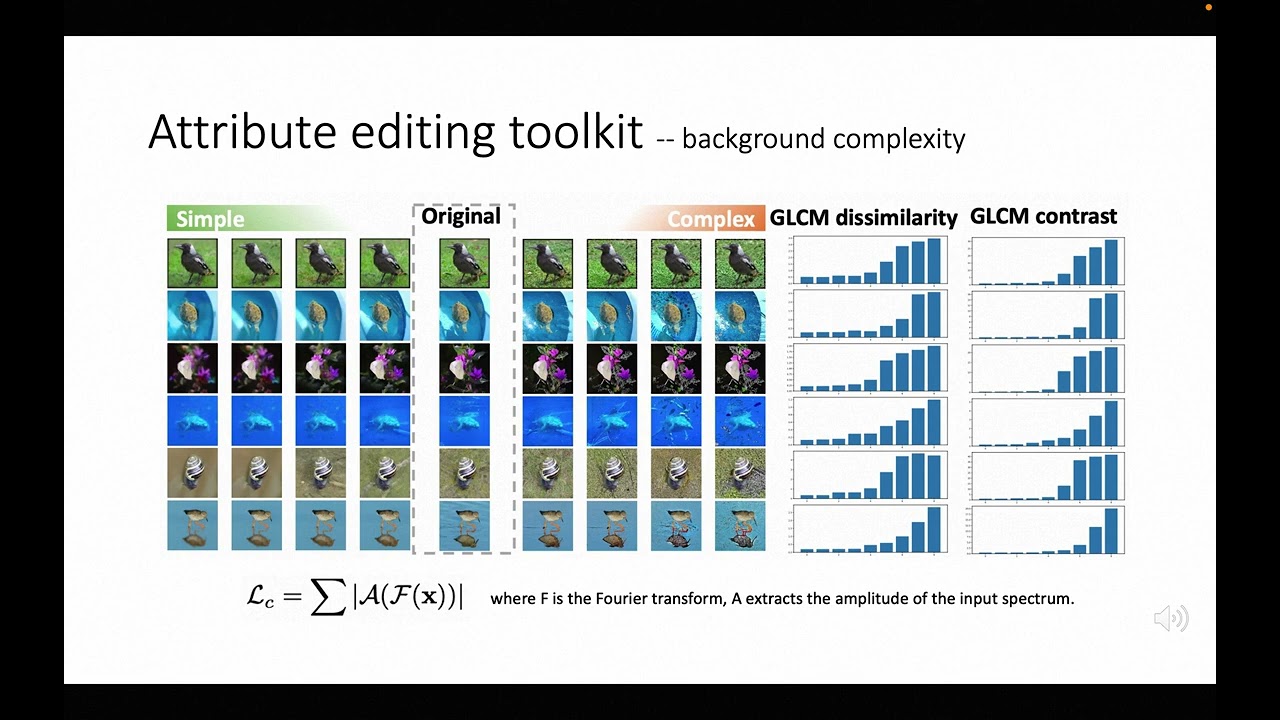

CVPR 2023

ICLR2021 (spotlight)] Benefit of deep learning with non-convex noisy gradient descent

ICLR2021 (spotlight)] Benefit of deep learning with non-convex noisy gradient descent

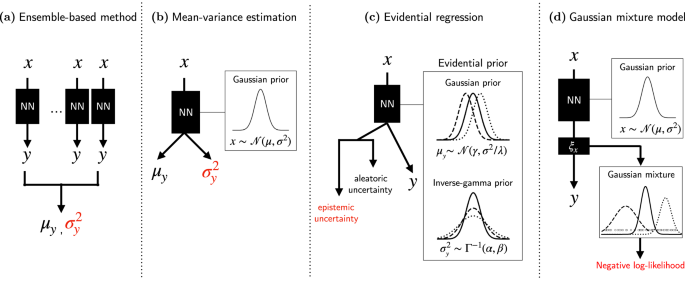

Single-model uncertainty quantification in neural network potentials does not consistently outperform model ensembles

Adversarial Neural Pruning with Latent Vulnerability Suppression

NeurIPS2020 (spotlight)] Generalization bound of globally optimal non convex neural network training: Transportation map estimation by infinite dimensional Langevin dynamics

ICML 2022

Iclr2020: Compression based bound for non-compressed network: unified generalization error analysis of large compressible deep neural network

- Medical Compression Non Elastic Support Foam Lined Padded – Still Me Inc

- Touchscreen Copper Fiber Compression Women Men Non slip Full - Temu

- Women's Compression and Non-Binding Socks

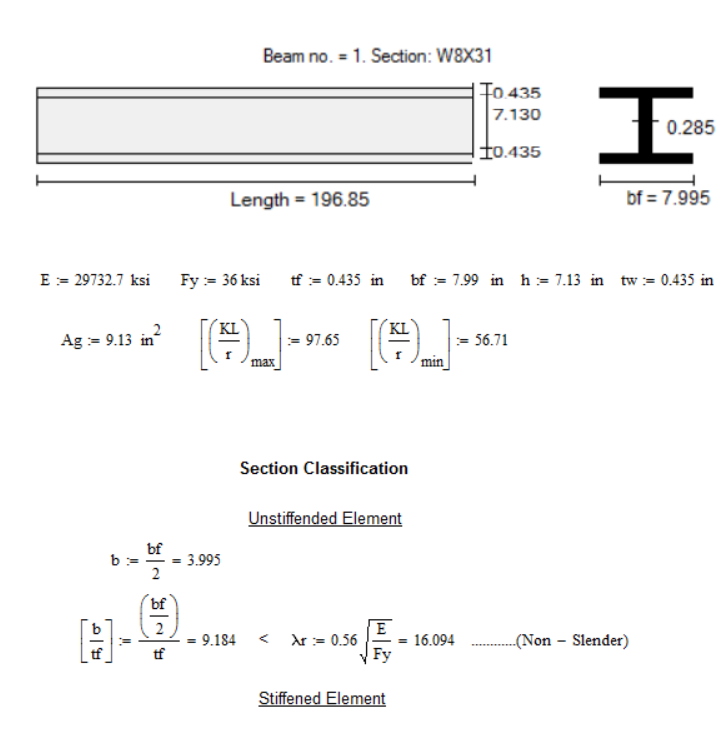

- Compression capacity of an I-shaped steel section (Non Slender

- BSN Medical Elastocrepe® Non-Adhesive Elastic Compression Bandages