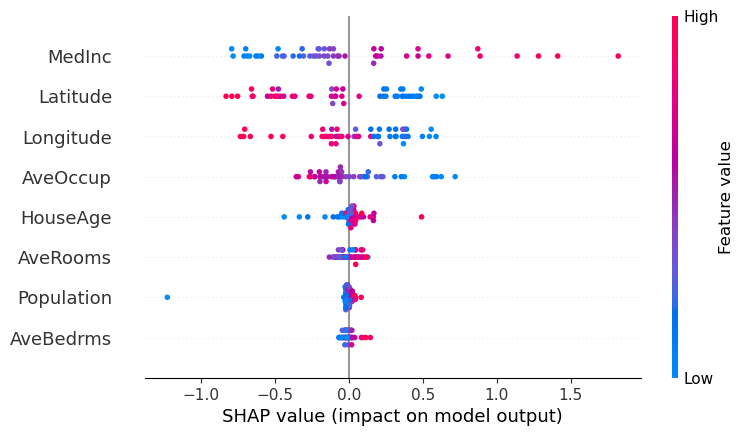

Using SHAP Values to Explain How Your Machine Learning Model Works, by Vinícius Trevisan

By A Mystery Man Writer

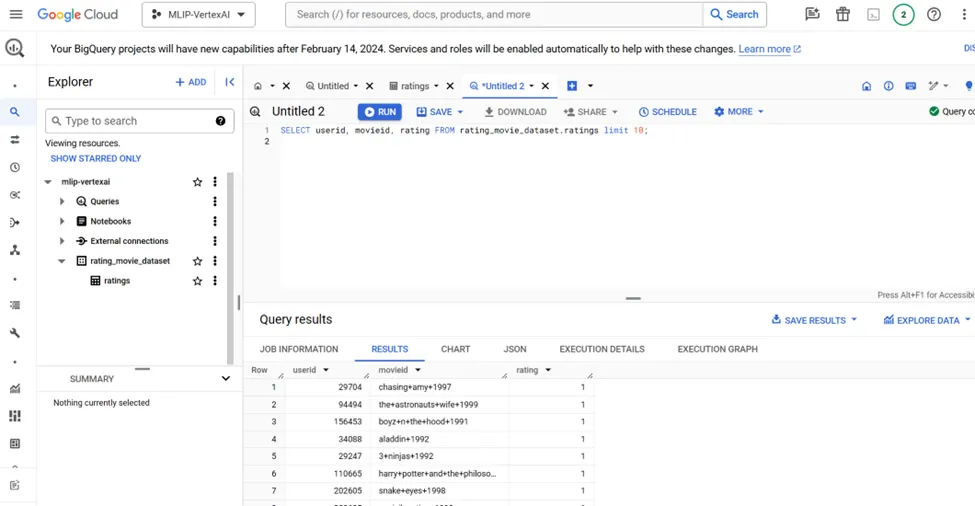

Is it correct to use the test data to produce the Shapley values? I believe we should use the training data, since we are explaining the model, which was configured
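The question turns on the fact that SHAP-style explanations use data in two distinct roles. A background set defines the baseline (expected) model output, and separate rows are the predictions being explained. In the `shap` library this typically shows up as passing background data when building an explainer and the rows to explain when calling it. The sketch below is a minimal brute-force illustration under stated assumptions, not the article's method or the library's implementation. It assumes a model given as a plain Python function, uses hypothetical training rows as the background, and explains one hypothetical test row by averaging the model over the background (an interventional-style value function). The names `coalition_value` and `shapley_values`, the toy model, and the data are all made up for illustration.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence, Set

Row = Sequence[float]
Model = Callable[[Sequence[float]], float]


def coalition_value(model: Model, x: Row, background: List[Row], subset: Set[int]) -> float:
    """Average model output when the features in `subset` are taken from x
    and every other feature is taken from each background row in turn."""
    total = 0.0
    for b in background:
        mixed = [x[j] if j in subset else b[j] for j in range(len(x))]
        total += model(mixed)
    return total / len(background)


def shapley_values(model: Model, x: Row, background: List[Row]) -> List[float]:
    """Exact Shapley values for one row x by enumerating every coalition.
    Cost grows as 2**n_features, so this only suits toy examples."""
    n = len(x)
    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        contribution = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of this size
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for combo in combinations(others, size):
                s = set(combo)
                with_i = coalition_value(model, x, background, s | {i})
                without_i = coalition_value(model, x, background, s)
                contribution += weight * (with_i - without_i)
        phi.append(contribution)
    return phi


# Hypothetical toy model with one interaction term (illustration only).
def model(r: Sequence[float]) -> float:
    return 2 * r[0] + 3 * r[1] + r[0] * r[2]


# Hypothetical background rows, standing in for training data: they set the baseline.
train_rows = [[0.0, 1.0, 2.0], [1.0, 0.0, 1.0], [2.0, 2.0, 0.0]]
# Hypothetical row being explained, standing in for a test-set example.
test_row = [3.0, 1.0, 1.0]

base_value = sum(model(b) for b in train_rows) / len(train_rows)
phi = shapley_values(model, test_row, train_rows)

# Efficiency property: the contributions sum to f(x) minus the base value.
print(phi, sum(phi), model(test_row) - base_value)
```

Changing the background shifts the base value that every explanation is measured against, while changing the explained row only changes which prediction is decomposed; in this sketch the two choices are independent.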

Interpreting ROC Curve and ROC AUC for Classification Evaluation, by Vinícius Trevisan

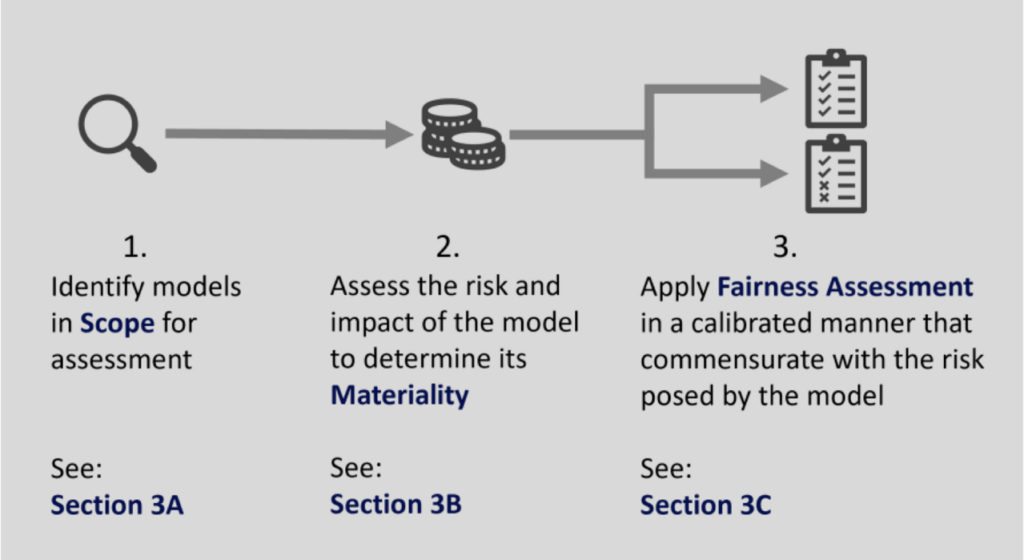

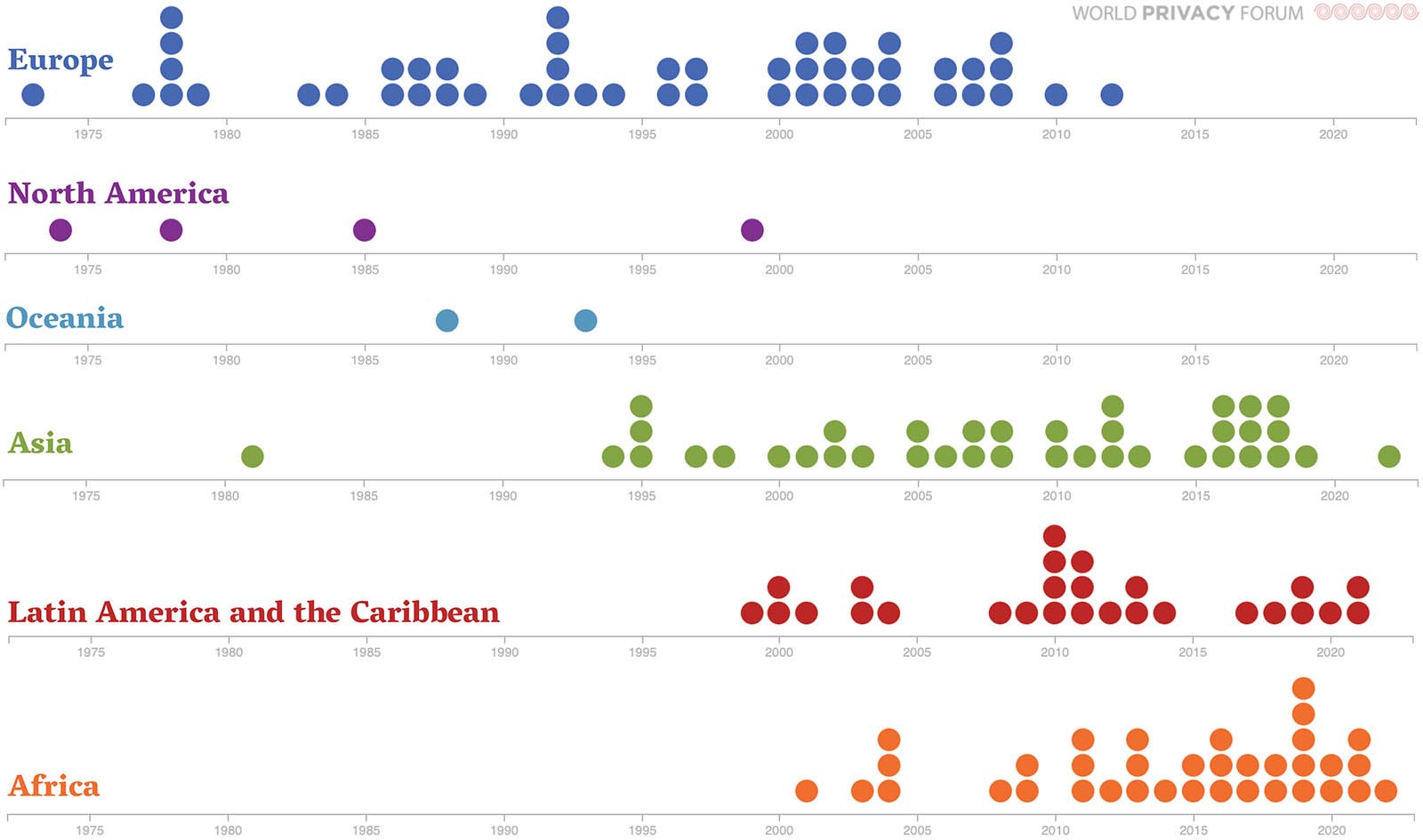

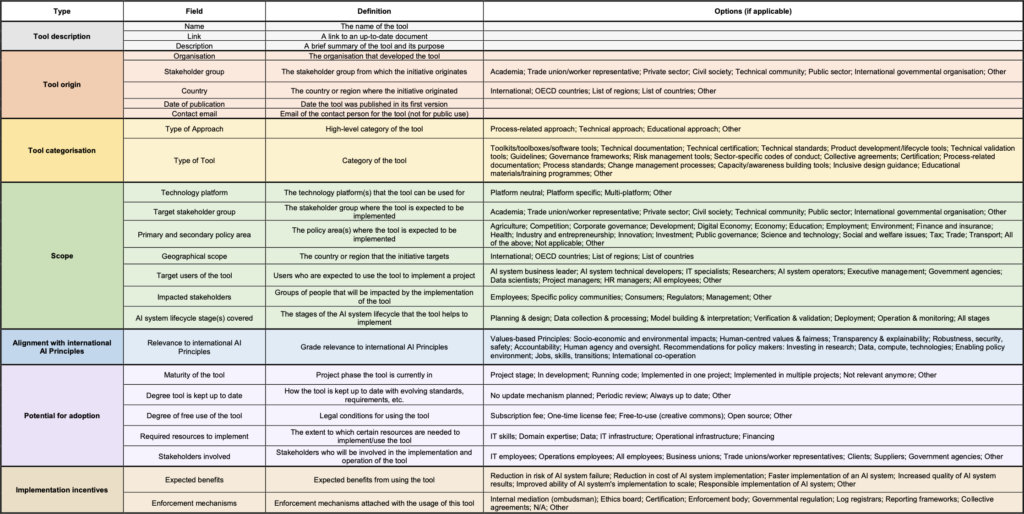

New Report: Risky Analysis: Assessing and Improving AI Governance Tools



List: explainability, Curated by Galkampel

Introduction to Explainable AI (Explainable Artificial Intelligence or XAI) - 10 Senses


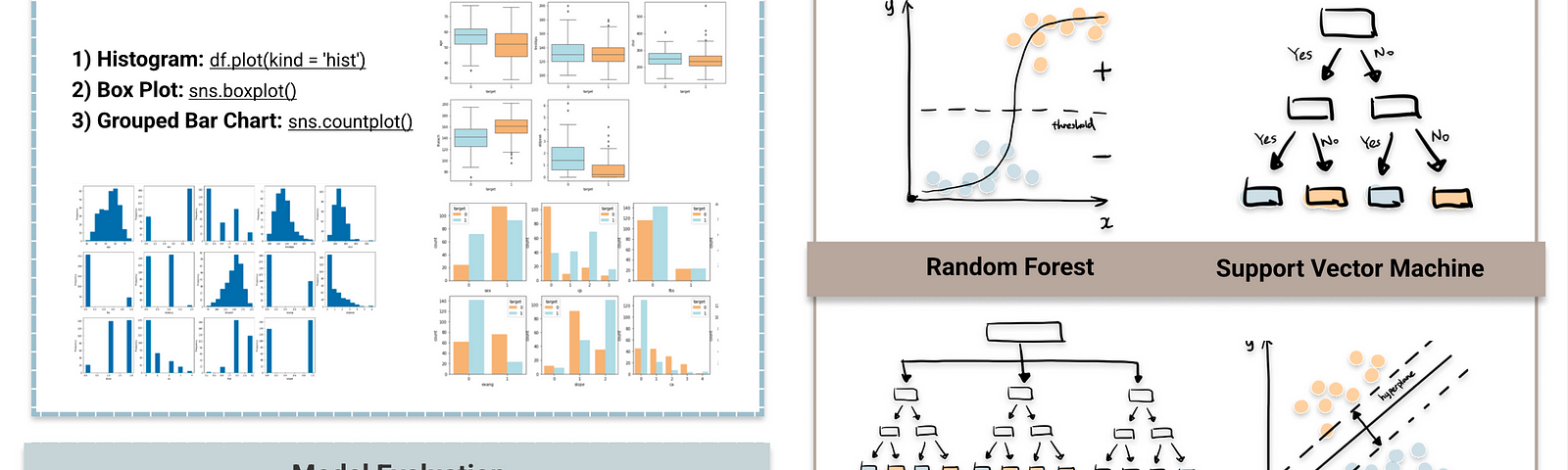

List: Data science, Curated by Bodil Elbrink

The most insightful stories about Explainable AI - Medium

Is your ML model stable? Checking model stability and population drift with PSI and CSI, by Vinícius Trevisan

List: Data Science, Curated by SRI VENKATA SATYA AKHIL MALLADI

Top stories published by Towards Data Science in 2022

Target-encoding Categorical Variables, by Vinícius Trevisan

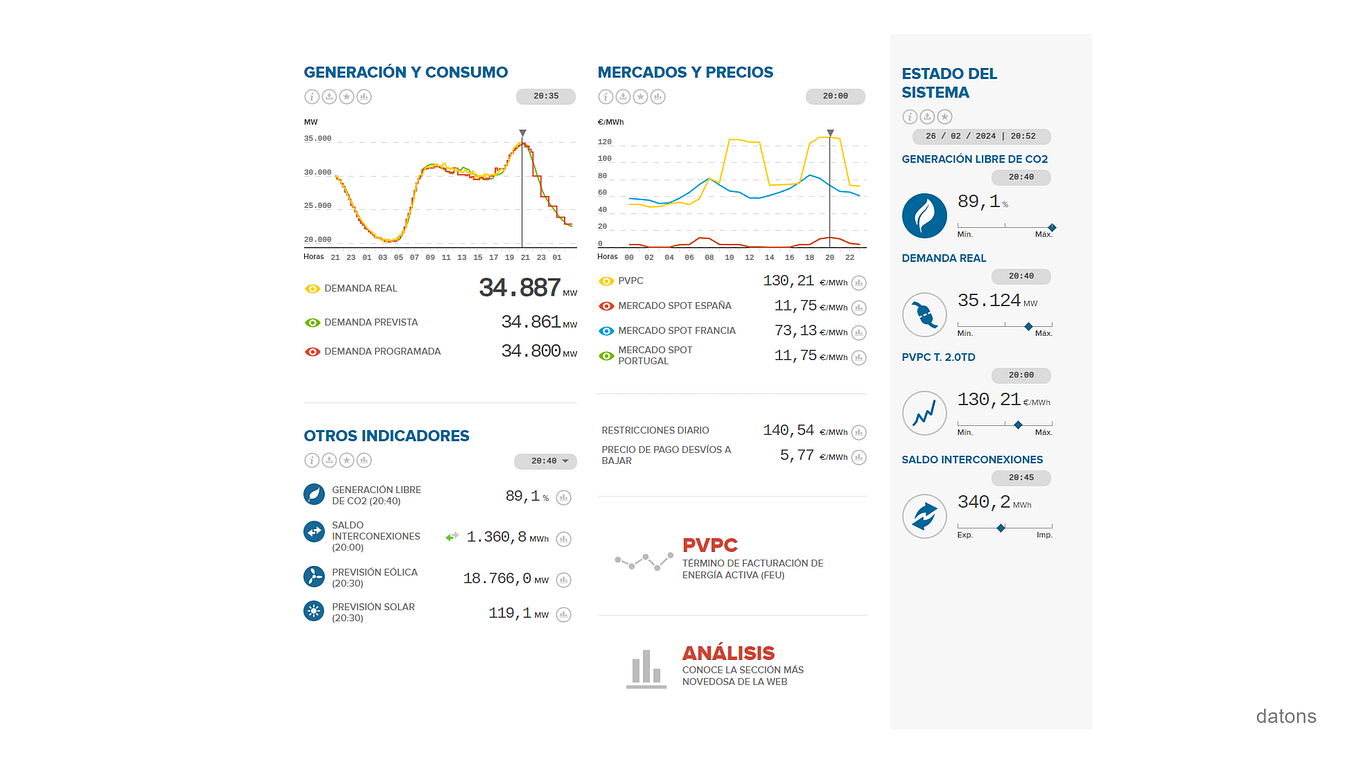


- SHapley Additive exPlanations (SHAP): What Is It?

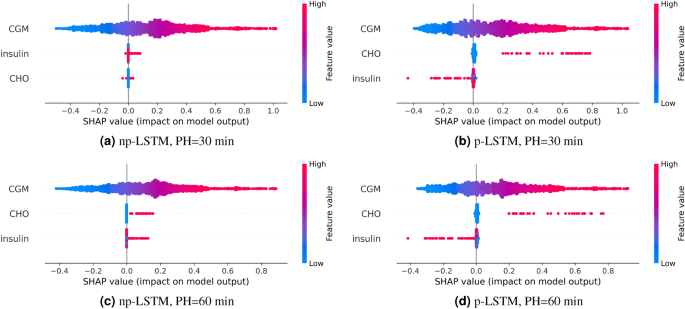

- The importance of interpreting machine learning models for blood glucose prediction in diabetes: an analysis using SHAP
